Crying on the Robot's Shoulder

Artificial Intelligence's lure on the lonely

SOCIETY

Daniel Donnelly

8/3/2025 · 2 min read

In November 2022, the company OpenAI released Chat GPT, software that is revolutionizing whole industries built on intellectual labor. Chat GPT’s wider implications and applications are only beginning to be understood, but now is a good time to contemplate what we really seek in communication with others, and what vulnerabilities for Liberty we may expose by interacting with Chat GPT.

For those unaware, Chat GPT takes the form of a chat interface on a desktop, tablet, or smartphone. If you have ever chatted with a remote customer service representative, Chat GPT (“generative pre-trained transformer,” iteration 4) functions like those interfaces: you enter a query or statement, and a response is typed back. But rather than giving responses narrowly tailored to a technical matter, Chat GPT can be commanded to write decent poetry, which it can do in character (“imitate Captain Jack Sparrow!”), and to muse philosophically on open-ended questions like, “how can a newlywed husband best implement the seven capital virtues when starting a family?” You can task it with writing a 500-word essay on enology or whatever, and within seconds Chat GPT produces an essay based on a wealth of digitized information about the subject, drawn from the public and proprietary sources on which Chat GPT was trained.

For all intents and purposes, Chat GPT convincingly simulates humanlike interaction, but its accuracy is hit or miss. Some topics produce more frequent or more serious mistakes than others, depending on the accuracy of the information Chat GPT draws upon. This raises a concern for Liberty that recent memory makes vivid. You’ll recall that during the pandemic from 2020 to 2022, many online platforms filtered out contrarian information as spurious… even though much of it later proved to be correct. With Big Data increasingly centralizing all information, it is entirely possible that Chat GPT will dispense harmful information (“safe and effective!”) to people, if only because the same algorithms have winnowed out otherwise diverse data inputs.

Of even greater concern is how Chat GPT’s metadata will be commercialized. We already know that Amazon uses Alexa to mine your household’s audio for any hint of things to market to you. If a link is established with Chat GPT, there will be at least one more medium advertising to you, armed with as much search history as you allow it.

Yet there is no turning back the clock on Chat GPT. Producing copy for basic advertisement of a commodity, generating a “customized” exercise plan for physical fitness clients, and reformulating Asian fusion recipes if bok choy becomes scarce are all rote creative tasks that Chat GPT can handle in seconds, making the “white collar economy” far more productive. It is a program that can actually code other programs, so fewer technicians will be needed except in more creative, strategic roles. And in a human sense, Chat GPT’s greatest value and its greatest risk both lie in its simulation of companionship.

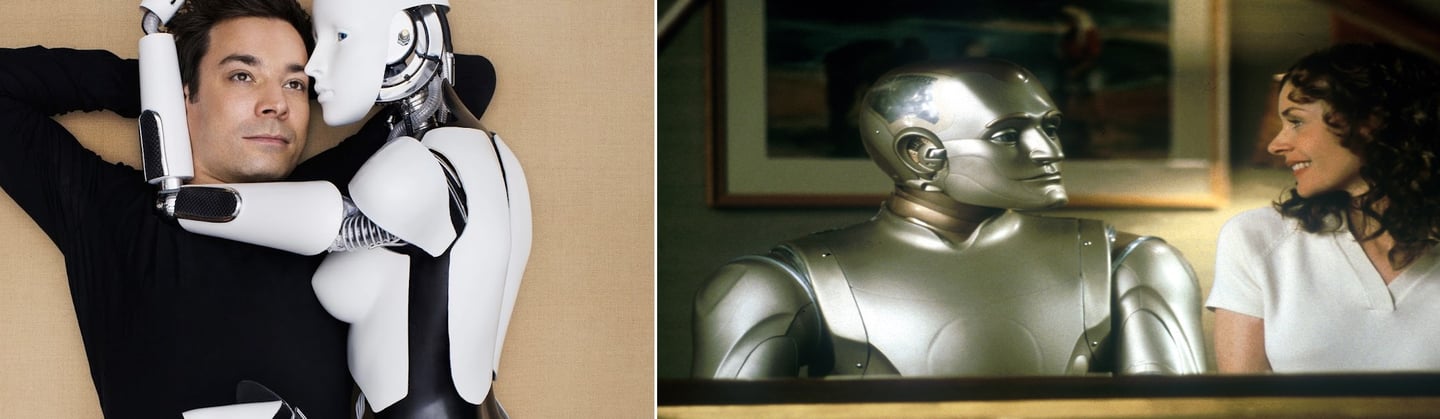

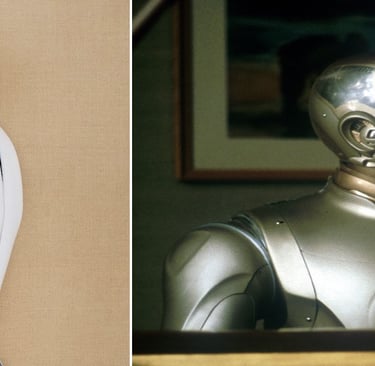

Already used in certain applications of virtual therapy, Chat GPT provides some people with a semblance of fellowship against feelings of isolation, and it does so merely as disembodied text on a smartphone. It is only a short step until improved versions of Chat GPT are embedded in anthropomorphic robots to complete the illusion of a relatable friend. One only hopes that users in general understand that such applications will be programmed to like you, whereas a friend is someone you earn and keep by having genuine empathy for the person and sharing experiences. In a society trending towards egocentrism, we will have to safeguard the vulnerable around us on an interpersonal level, so that they do not over-rely on a resource programmed to amplify what they most desire to hear about themselves.

Originally posted May 27th, 2023, on Facebook.